Effective AI governance is crucial for translating ethical principles and regulatory requirements into actionable organizational practices.

NIST AI Risk Management Framework (AI RMF)

Before we get into the details of an operational governance framework, it is worth reviewing the risk management approach proposed by the US National Institute of Standards and Technology (NIST). The AI Risk Management Framework (AI RMF 1.0) is a thorough yet flexible cross-sector framework designed to help organizations manage AI risks and promote trustworthy AI. It emphasizes trustworthiness characteristics such as validity, reliability, safety, security, accountability, transparency, explainability, privacy enhancement, and fairness. It is particularly relevant to the life sciences because of its risk-based approach, support for traceability, and alignment with good machine learning practices.

The AI RMF is structured around four core functions:

- Govern: Establish policies, roles, and accountability for AI risk management, for example by forming AI Ethics Committees and defining roles and responsibilities for managing AI risks.

- Map: Identify risks such as algorithmic bias in patient data or inaccuracies in diagnostic tools, and analyze their impact on patient safety, data privacy, and regulatory compliance.

- Measure: Develop metrics and tools to assess and monitor AI risks, such as performance metrics (e.g., accuracy in medical imaging), and watch for unintended consequences.

- Manage: Implement strategies to mitigate risks and improve AI trustworthiness, such as regular audits, validation of training data sets, and checks that test data reflects real-world scenarios.
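As a minimal sketch of what the Measure function can look like in practice, the Python snippet below computes accuracy, sensitivity, and specificity for a binary classifier (for instance, an imaging model that flags a finding as present or absent) and lists the metrics that fall below agreed minimums. The function names, the sample labels, and the minimum values are illustrative assumptions, not part of the NIST AI RMF.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # true positives
    fp: int  # false positives
    tn: int  # true negatives
    fn: int  # false negatives


def count_outcomes(y_true, y_pred):
    """Tally outcomes for binary labels: 1 = finding present, 0 = absent."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1
        elif truth == 0 and pred == 1:
            fp += 1
        elif truth == 0 and pred == 0:
            tn += 1
        else:
            fn += 1
    return ConfusionCounts(tp, fp, tn, fn)


def performance_metrics(c):
    """Assumes the sample contains at least one positive and one negative case."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
    }


def metrics_below_minimum(metrics, minimums):
    """Return the names of metrics that miss their agreed minimum."""
    return sorted(name for name, floor in minimums.items() if metrics[name] < floor)


# Hypothetical labelled sample and acceptance minimums, for illustration only.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
minimums = {"sensitivity": 0.90, "specificity": 0.80}

counts = count_outcomes(y_true, y_pred)
metrics = performance_metrics(counts)
failed = metrics_below_minimum(metrics, minimums)
print(metrics, failed)
```

Reporting sensitivity and specificity alongside accuracy matters when positive cases are rare, because accuracy alone can look acceptable while the model misses many true findings.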

Addressing Bias and Discrimination in AI Applications

We propose the following strategies to build trustworthy AI systems that deliver fairness and equity:

- Diverse and Representative Data: Use data sets that reflect a wide range of demographics, regularly audit and update training data to ensure inclusivity, and keep test data separate from training data.

- Human Oversight: Involve domain experts to review AI outputs and establish AI Ethics Committees.

- Algorithm Design: Implement fairness constraints and use explainable AI techniques.

- Continuous Monitoring: Monitor performance post-deployment and use feedback loops to identify and correct unintended biases.

- Bias Detection and Mitigation: Conduct regular bias audits and remediate the fairness issues they uncover.

- Stakeholder Engagement: Collaborate with diverse groups and communicate transparently about AI operations and limitations.

- Regulatory Compliance: Align with global AI regulations and ethical guidelines.
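To make the idea of a bias audit more concrete, the sketch below compares two common group-level measures across demographic groups: the selection rate (the share of positive predictions per group) and the true positive rate (the share of actual positives the model catches per group). The group labels, the sample data, and the helper names are hypothetical, and which gap is acceptable is a decision for the governance team rather than something this code can settle.

```python
from collections import defaultdict


def selection_rates(groups, y_pred):
    """Share of positive predictions (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, y_pred):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def true_positive_rates(groups, y_true, y_pred):
    """Share of actual positives that were predicted positive, per group."""
    actual = defaultdict(int)
    caught = defaultdict(int)
    for group, truth, pred in zip(groups, y_true, y_pred):
        if truth == 1:
            actual[group] += 1
            caught[group] += pred
    return {g: caught[g] / actual[g] for g in actual}


def max_gap(rates):
    """Largest difference between any two groups for a given rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: two groups, binary labels and predictions.
groups = ["A"] * 5 + ["B"] * 5
y_true = [1, 1, 0, 0, 1, 1, 1, 0, 0, 1]
y_pred = [1, 1, 0, 1, 1, 1, 0, 0, 0, 0]

sel = selection_rates(groups, y_true and y_pred)
tpr = true_positive_rates(groups, y_true, y_pred)
print("selection-rate gap:", round(max_gap(sel), 3))
print("true-positive-rate gap:", round(max_gap(tpr), 3))
```

A large selection-rate gap points to a demographic parity concern, while a large true-positive-rate gap suggests the model serves one group's actual positives worse than another's. The two measures can disagree, which is one reason audits usually track more than one.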

Proposed Framework for AI Governance

A comprehensive AI governance framework should operationalize governance across the organization and be tailored to support responsible, secure, and trustworthy AI solutions. The framework proposed below is adapted from the NIST AI RMF 1.0 described above and from other governance proposals:

- Organization: Establish an AI Center of Excellence (CoE), a steering committee, and a governance team, and involve multidisciplinary stakeholders, especially subject matter experts in the relevant functional areas. Define reporting structures, accountability, and escalation paths.

- Operating Model: Define how governance is executed, including processes, procedures, communications, and integration points across teams. Designate an owner for each process or AI application, with a clear scope, documented goals and objectives, and defined roles and responsibilities.

- Risk & Compliance: Assess AI risk at each lifecycle stage and implement mitigation plans, asking “what could go wrong?” (e.g., hallucinations, misuse) and “how could we detect and respond?” Ensure that risks are documented, technical and procedural controls are in place, and stakeholder approvals are obtained before production deployment.

- Policies, Procedures, & Standards: Develop overarching AI policies and update existing SOPs to reflect AI-specific considerations. Apply data governance policies to ensure privacy and compliance with regulations such as the Health Insurance Portability and Accountability Act (HIPAA) and General Data Protection Regulation (GDPR).

- Model Governance: Ensure that models are appropriate for the question of interest and context of use. Define standards for model development, validation, and monitoring, distinguishing between procedural management and formal validation for high-risk applications.

- Tools & Technologies: Leverage tools for explainability, bias detection, and auditability to support compliance and trust.

- Monitoring: Establish continuous monitoring processes to ensure that AI systems perform as intended and adapt to changes in data or context, with defined triggers for retraining or rollback. When a trigger fires, fine-tune or retrain the model with new data and redeploy it to production.

Governance is a continuous process, ensuring that solutions are innovative, safe, compliant, and aligned with organizational values and ethical AI principles.
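One way to express the "defined triggers for retraining or rollback" mentioned under Monitoring is a small rule that combines a data drift score with a live performance check. The sketch below uses the Population Stability Index (PSI), a commonly used drift measure, over pre-binned feature distributions. The threshold values, the function names, and the sample distributions are assumptions chosen for illustration; real thresholds should come from the organization's own validation work.

```python
import math


def population_stability_index(baseline, current, eps=1e-6):
    """PSI between two binned distributions given as lists of bin fractions.

    Each list should sum to 1 and use the same bin edges. Fractions are
    floored at eps so that empty bins do not cause a division by zero.
    """
    total = 0.0
    for expected, actual in zip(baseline, current):
        expected = max(expected, eps)
        actual = max(actual, eps)
        total += (actual - expected) * math.log(actual / expected)
    return total


def monitoring_action(psi, accuracy, *, psi_retrain=0.2, accuracy_floor=0.85):
    """Map monitoring signals to an action. Thresholds are illustrative."""
    if accuracy < accuracy_floor:
        return "rollback"  # performance is unacceptable: revert to the prior version
    if psi >= psi_retrain:
        return "retrain"   # inputs have drifted: schedule retraining and review
    return "ok"


# Hypothetical bin fractions at validation time versus in production.
baseline_bins = [0.25, 0.25, 0.25, 0.25]
current_bins = [0.10, 0.20, 0.30, 0.40]

psi = population_stability_index(baseline_bins, current_bins)
action = monitoring_action(psi, accuracy=0.91)
print(round(psi, 3), action)
```

Checking performance before drift reflects a deliberate ordering: a model that is already failing its accuracy floor should be rolled back first, whereas drift alone is an early warning that justifies retraining before performance degrades.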

Conclusion

Increased AI adoption in life sciences needs to be balanced with evolving regulatory and ethical considerations. The following are the key takeaways from this introductory overview on AI ethics, regulations, and governance for life sciences:

- AI is transformative: AI is fundamentally changing ways of working across life sciences, offering immense potential for efficiency and improved patient outcomes.

- Fast-paced evolution: AI tools and technologies are emerging at an unprecedented rate, often outpacing regulatory development.

- Evolving regulatory landscape: Many regulatory guidelines and frameworks are still in draft stages, but they are continuously evolving towards greater harmonization across global health authorities.

- Trustworthy and responsible AI is paramount: Ensuring appropriate and ethical use of AI is critical. This requires mapping out how AI principles apply to specific functional areas and use cases (e.g., clinical trials, pharmacovigilance).

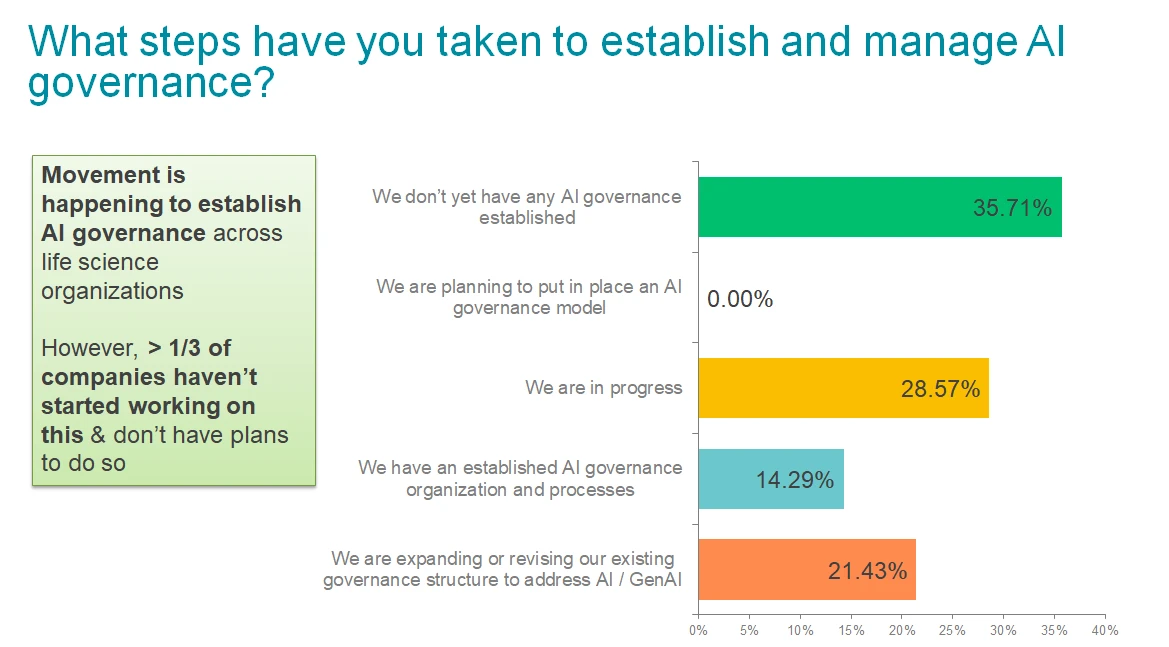

- Focus on governance: Organizations must prioritize defining, implementing, and continuously revising AI governance frameworks. This includes addressing people, process, data, and technology aspects.

- Leverage existing frameworks: Organizations do not need to reinvent the wheel. Frameworks like the NIST AI RMF provide a robust foundation that can be extended and tailored to specific organizational needs and use cases.

- Continuous monitoring: Staying abreast of changes in guidelines and regulations is vital for ongoing compliance and responsible innovation.

- Stakeholder engagement and training: Broad organizational alignment is essential. All stakeholders, from developers to end users and leadership, must be trained on ethical AI principles, responsible use, and the implications of AI in their work. This includes understanding potential biases, hallucinations, and the importance of verifying AI-generated outputs.

- Data quality is foundational: The effectiveness and reliability of AI systems are directly tied to the quality of the data they are trained on. Robust data governance and quality-assurance processes are key to the success of any AI implementation.

- Human in the loop: All applications, especially in GxP environments, require human oversight to validate processes and outputs, correct errors, and ensure accountability. This balance between automation and human judgment is key to building confidence in AI solutions.

While the complexities of AI ethics, regulations, and governance are significant, a proactive, integrated, and continuously adaptive approach will enable life sciences organizations to harness AI’s full potential responsibly, delivering value to patients and maintaining public trust. We recommend starting with the proposed governance framework and following it with frequent reviews and process improvements as AI adoption increases within your enterprise.